The Rise of the Igonfluencer

How AI is making it easier to sound smart and harder to think deeply.

Do you ever read the footnotes? That’s where I share personal thoughts, some book recommendations, and the occasional random comment. And if you're planning to scroll down there, might as well hit the like ❤️ while you're at it.

It began with a typo.

Not just any typo but one from a world-renowned bestselling author. His gift is simplifying complex ideas.

While he was quoting Nassim Taleb1, the mathematical term “eigenvalue” mysteriously (or erroneously?) became... “igon value”.

Psychologist Steven Pinker, flipping through the book2, spotted it: “Igon value? That’s not a concept,” he must have muttered and then wrote a takedown review3:

[He] frequently holds forth about statistics and psychology, and his lack of technical grounding in these subjects can be jarring.

Ouch. But it didn’t stop there:

When a writer’s education on a topic consists in interviewing an expert, he is apt to offer generalizations that are banal, obtuse or flat wrong.

So much drama… I know!

Steven Pinker called this the Igon Value Problem - when someone sounds like an expert without actually being one.

The phrase spread quickly among academics, back when “going viral” wasn’t even a thing.

But Tapan, who was the world-renowned bestselling author?

Malcolm Gladwell.

Yes, that Malcolm Gladwell. The man who gave us Outliers, The Tipping Point, and Blink. The maestro of pop-science and narrative nonfiction4.

But what once felt like a rare mistake now feels familiar.

The Problem Isn’t New. But It’s Now Everywhere.

Pinker’s critique wasn’t cruel. It was a mirror, a warning about the growing gap between knowing something and truly understanding it.

Today, the Igon Value Problem isn’t just a typo in a bestseller. It’s a feature of everyday life.

You see it on LinkedIn, where thought leaders “break down” complex topics after a single podcast. Then you hear it in podcasts, where hosts “go deep” on subjects they discovered last Tuesday. And those hosts read it in a newsletter from a writer who discovered the topic just one month ago5.

On Twitter, I have watched the same person become an overnight expert on aviation in India, the Iran-Israel conflict, the Gaza war, U.S. immigration, climate change, and South African cricket strategy. And that was just June!

A stupid man's report of what a clever man says can never be accurate, because he unconsciously translates what he hears into something he can understand.

Bertrand Russell

On social media, that distortion multiplies fast.

A shallow but catchy idea gets thousands of likes, mostly from those who don’t know enough to spot the flaw. The feedback loop is instant. Praise inflates confidence. And confidence further masks the shallowness.

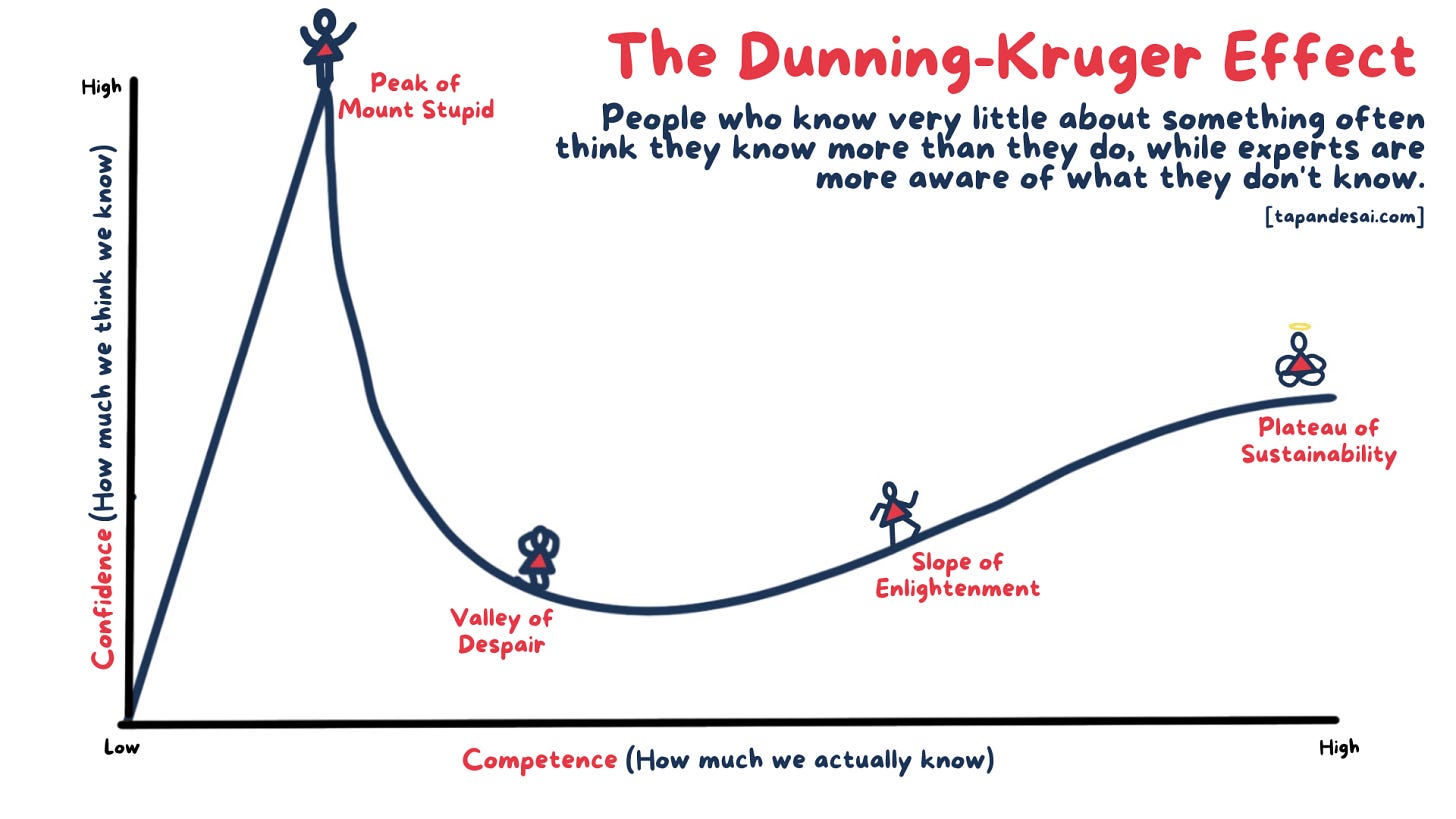

It’s Dunning-Kruger on steroids: Those with basic knowledge act like experts, while the actual experts, aware of the nuance, speak with hesitation or get drowned out.

We live in the golden age of confidence without competence.

It’s never been easier to sound smart without being smart. And the rise of AI has supercharged this dynamic.

The Age of Instant Expertise

Knowledge used to be earned the hard way, through books, mentors, mistakes, and time.

Now, we outsource it.

We Google. We ask ChatGPT. We skim summaries and swipe through threads.

And it feels like learning.

But there’s a difference between accessing information and actually understanding it.

Between watching a YouTube summary6… and doing the mental reps yourself. Between tweeting an answer and knowing what it means.

It’s the illusion of mastery7: the moment when familiarity feels like expertise.

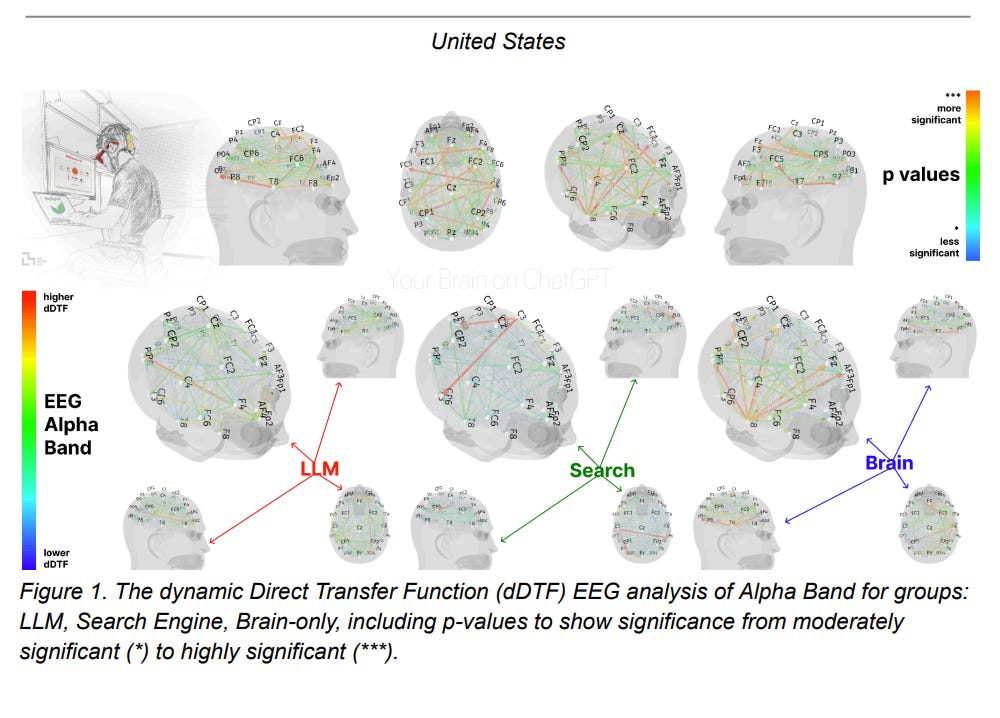

If you have followed AI news lately, you may have seen the MIT study making the rounds8. It found something striking.

Students who used AI to write essays wrote more homogeneous sentences and had significantly quieter brains. Brain connectivity dropped by as much as 55%.

Researchers called it “robust” evidence. The more people relied on AI, the less their brains engaged.

The term is cognitive offloading - outsourcing your thinking to a machine. It feels smart. It feels fast. But it slowly erodes the muscle we need most: the ability to think deeply.

Today with ChatGPT, looking like an expert is easy. Understanding like one isn’t.

It ain’t what you don’t know that gets you in trouble. It’s what you know for sure that just ain’t so.

Fittingly, the MIT study went viral through a Twitter thread9 from a person “breaking down the latest MIT paper and it’s terrifying”.

This is what I call... an Igonfluencer.

The Rise of the Igonfluencer

You have seen them.

They post slick carousel threads on AI, productivity, or business models. They compress complex frameworks into bite-sized summaries, wrap them in minimal visuals, and title them something like, “7 AI tools that will 10x your career” on LinkedIn10.

They are confident. Crisp. Always one step ahead… just enough to sound like an authority.

But beneath the polish lies a quiet irony: in an age overflowing with information, deep knowledge is harder to find.

We are surrounded by content, yet starved for clarity.

It’s not that we lack experts. But we are drowning in shovel-sellers, people who don’t dig deep themselves, but sell tools to others who want to.

This isn’t entirely new: armchair experts11 have always existed.

What’s changed is the velocity and the reach. A curious person can skim a few blog posts, watch a YouTube breakdown, and enter a conversation sounding informed.

In a world where surface-level insight gets more applause through likes and upvotes than years of earned wisdom, the incentive to go deeper is gone.

Instant validation today beats long-term understanding.

That’s the real risk: not the rise of novice voices, but the illusion that a quick take is the same as real expertise.

But it’s not all bad.

Knowledge Has Been Democratised

So, is the rise of AI and ubiquitous information a net negative for deep knowledge? Not quite. There’s nuance here12.

The truth is, something remarkable is also happening.

Just this June, I got to ask a solicitor tough questions about the UK Building Safety Act. I dug into how tariffs work. I did some research on automated decision-making in EU countries. And I was able to publish my first children’s book.

None of that would have happened without AI and this new world of access.

A kid from an Indian town, now in a foreign country with nothing but the internet and a ChatGPT subscription, can read about laws, business models, and Kindle Direct Publishing, just by asking an AI to break it down.

The barrier to entry for learning has never been lower.

AI can personalise learning. It answers “dumb” questions without judgment. It acts like a mentor, at scale. It can even present multiple perspectives.

These are huge upsides.

Think of it like the Pareto Principle: 80% of ideas are now easily accessible to any person.

It’s the remaining 20% - the nuance, the depth, the repetition - that separates the curious from the truly knowledgeable.

The Real Igon Value Problem

In a world where AI can spin out clever breakdowns in seconds, where influence is built on threads, reels, and summaries, we are all at risk of becoming Igonfluencers.

Even the well-meaning ones. Especially the well-meaning ones.

The real danger isn’t that someone gets a fact wrong… it’s that they sound so right doing it. Just like Malcolm Gladwell13.

It’s the New Illusion of Mastery. So, what do we do?

We slow down.

We treat AI like a coach, not a crutch. We get an answer from AI and then we dig.

We make peace with saying, “I don’t know yet14”.

We remember that the deepest thinkers often sound uncertain, not because they are wrong, but because the only thing they are sure of is that they don’t know everything.

And most importantly, in a world that rewards quick summaries and fast breakdowns, we remember that fluency isn’t mastery.

The Igon Value Problem was never just about a typo.

It’s about what happens when we stop thinking we might be wrong.

Until next time,

Tapan (Connect with me by replying to this email)

Yes, the Nassim Taleb - the author of Antifragile, The Black Swan, and Fooled by Randomness.

The New York Times takedown article written by Steven Pinker. If you haven’t read this newsletter yet, it might be worth doing that first unless you prefer reading random articles without context.

Malcolm Gladwell already gets a fair bit of flak - from academics and blog writers who prefer storytelling to be in the footnotes. One such critique dubs his style “the tyranny of Malcolms”, referring to the now-ubiquitous trend of opening chapters with long, engaging stories that may or may not return later. I use the same style. I am a Malcolm.

The irony of this sentence is not lost on me.

Now you can use NotebookLM to summarise the YouTube summary for you.

One of my favourite quotes while researching this topic came from Bertrand Russell - “The fundamental cause of the trouble is that in the modern world the stupid are cocksure while the intelligent are full of doubt.”

Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task. And if you are thick like me, here’s the summary for it. Yep, I did that.

This is from the book, Think Again by Adam Grant. I have covered it in a previous newsletter.

On a random side note: God, I miss nuance! Somehow, every expert online now talks in absolutes - it’s either “master this in 7 days” or “you are falling behind.” No one’s saying, “This might work for you... except for A, B, or C”. We have replaced thoughtful uncertainty with absolutes. In real life, true expertise shows up as complexity. The more someone understands a topic, the more they present multiple sides, not just a single, shiny answer. But, unfortunately, nuance doesn’t go viral!

Do I hate Malcolm Gladwell? Not really. He made complex ideas feel simple and that’s no small feat. His storytelling pulled psychology and statistics out of research papers and into conversations. He also made space for a generation of curious minds, including mine.

Please just start saying “I don’t know”. You don’t have to know everything, all the time. You are not a search engine. Also, you don’t need to have an opinion about everything.

bhai No! Let me live in the delusion that I know some stuff and I can comment on them. I'm totally discarding your breakdown on this topic and the rational justification. Long live delulu xP

Ironically I just posted a post called "7 questions to rule them all"... I might be an igonfluencer, well but without the influence 😄.

More seriously, thanks for your take. It is important to recognize where our expertise lies and where it doesn't. Not misrepresenting that is essential, as is citing the original source. We might not get everything right when trying to summarize or popularize a topic, but the least we can do is make it easy to be corrected.